In this short post, we will delve into issues you may encounter when trying to create Unity-compatible, single-node clusters in Azure Databricks using a linked service connector in Azure Data Factory. A workaround is provided as well.

Scenario

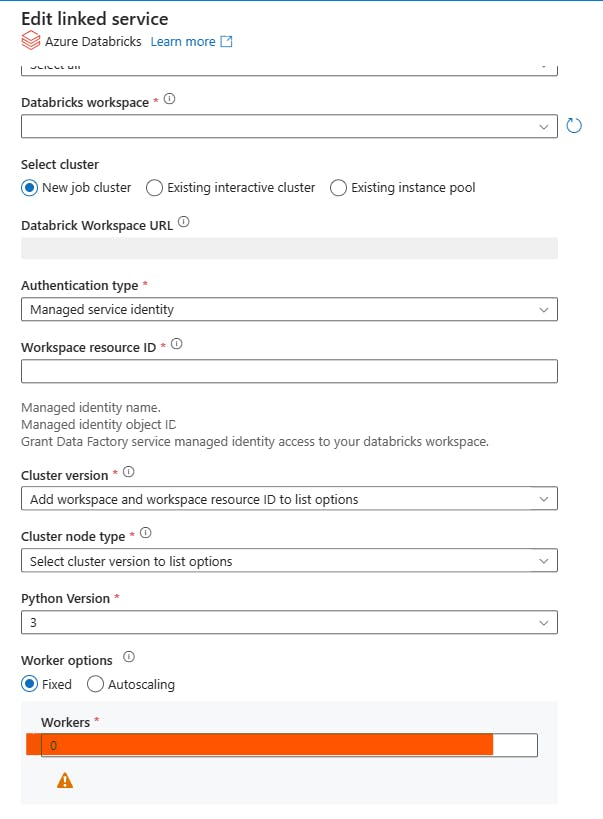

Let's say that you are orchestrating your Databricks notebooks with an Azure Data Factory task. You want to use Databricks job clusters (non-interactive clusters) when running those jobs. Moreover, your Databricks jobs rely on reading tables from and writing tables to Unity Catalog. Thus, your clusters must be able to use Unity Catalog inside Databricks (i.e. 'Unity-enabled'). In Azure Data Factory, you configure a linked service to a Databricks workspace. This is enough for your notebooks to be called from ADF and executed on the job clusters just fine.

At some point, you notice that some jobs are small enough to run on a single node cluster, which may allow you to save a bit on your cloud compute spend. You naturally start in the linked service UI in ADF by setting the worker count to 0.

You may even go as far as setting an additional Spark configuration via the linked service: "spark.databricks.cluster.profile": "singleNode"
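
For reference, the two settings above could end up in the linked service definition roughly as follows. This is a minimal sketch in Python rather than an export from a real factory: the property names newClusterNumOfWorker and newClusterSparkConf are taken from the linked service ARM definition, while storing the worker count as a string is an assumption.

```python
import json

# Sketch of the single-node settings as they might appear under
# "typeProperties" of an Azure Databricks linked service.
# Assumption: the worker count is stored as a string.
single_node_type_properties = {
    "newClusterNumOfWorker": "0",
    "newClusterSparkConf": {
        "spark.databricks.cluster.profile": "singleNode",
    },
}

print(json.dumps(single_node_type_properties, indent=2))
```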

You test your changes. However, all your jobs in Databricks are now failing. ADF sends you to the run pages in Databricks, where you discover that the runs fail with an error.

What happened?

Problem

As it turns out, setting the worker count to 0 in ADF's linked service causes Databricks to spin up job clusters in the Custom data access mode. That data access mode is incompatible with Databricks Unity Catalog. For a compute cluster to have Unity Catalog support, it must be created in one of the two supported access modes:

- Single User access mode, in which a cluster is assigned to and used by a particular user (or service principal / managed identity).
- Shared access mode, in which a cluster can be used by multiple users with data isolation among users.
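
The distinction can be expressed as a small check on the cluster attribute data_security_mode. This is only a sketch: the mapping of Single User to SINGLE_USER and Shared to USER_ISOLATION reflects my understanding of the Databricks Clusters API, and NONE and a missing value are used here as assumed stand-ins for the Custom mode.

```python
# Values of the cluster attribute `data_security_mode` that correspond to
# the two Unity Catalog-capable access modes (assumed mapping, see above).
UNITY_CATALOG_MODES = {
    "SINGLE_USER": "Single User",
    "USER_ISOLATION": "Shared",
}


def supports_unity_catalog(data_security_mode):
    """Return True if the given access mode can use Unity Catalog."""
    return data_security_mode in UNITY_CATALOG_MODES


# "NONE" and None stand in for a cluster created without a suitable access mode.
for mode in ("SINGLE_USER", "USER_ISOLATION", "NONE", None):
    print(mode, "->", supports_unity_catalog(mode))
```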

To our dismay, we discover that the ADF linked service UI does not allow us to explicitly set the data access mode of a job cluster in Databricks!

Workaround

To solve our predicament, we have to find an indirect way to set the desired compute cluster settings when executing a Databricks task via ADF's linked service. The following solution is proposed:

In Step 1, we will define a custom cluster policy in Databricks. The purpose of the policy is to force cluster attributes that we can't manipulate in the Azure Data Factory linked service connector (namely, the data access mode).

In Step 2, you will see how to reference a Databricks compute cluster policy in the Azure Data Factory linked service. This will apply the policy each time a new job cluster is requested via the ADF's linked service.

Step 1 - Databricks Cluster Policies

The first step of our workaround will be to define a new job cluster policy that will enforce specific values of cluster properties, which can't be set in the linked service in the Azure Data Factory.

Cluster policies can be created in a Databricks workspace and can impose limitations and/or fixed values on the compute attributes that workspace users can set and use for their clusters.

Note that the identity used by the ADF linked service needs Can Use privileges on the new custom policy created.

Databricks allows us to define the following policy types on supported compute attributes:

- Fixed policy limits the attribute to a specific value. (This is our policy type of interest. It makes Databricks enforce certain compute attribute values for us.)
- Forbidden policy disallows an attribute from being used in the cluster configuration.
- Allowlist policy specifies a list of values we are allowed to choose from when setting an attribute.
- Blocklist policy is a list of disallowed values for a selected attribute.
- Regex policy is similar to Allowlist policy, but allowed values must match a specified regular expression.
- Range policy limits the attribute value to a specific range.
- Unlimited policy could be used when you wish to make the attribute required or to set the default value in the UI.
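
To make the semantics of these policy types more concrete, here is a rough Python sketch with one example rule per type and a simplified checker. The field names (value, values, pattern, minValue, maxValue, defaultValue) follow the Databricks policy definition format as I understand it; the node type names are made up, and is_allowed is a hypothetical helper that only approximates how Databricks evaluates a policy.

```python
import re

# One illustrative rule per policy type. The attribute values are made up.
EXAMPLE_RULES = {
    "fixed": {"type": "fixed", "value": "SINGLE_USER"},
    "forbidden": {"type": "forbidden"},
    "allowlist": {"type": "allowlist", "values": ["Standard_DS3_v2", "Standard_DS4_v2"]},
    "blocklist": {"type": "blocklist", "values": ["Standard_DS5_v2"]},
    "regex": {"type": "regex", "pattern": "Standard_DS.*"},
    "range": {"type": "range", "minValue": 0, "maxValue": 4},
    "unlimited": {"type": "unlimited", "defaultValue": 30},
}


def is_allowed(rule, value):
    """Return True if `value` satisfies `rule`; None means 'attribute not set'."""
    kind = rule["type"]
    if kind == "forbidden":
        return value is None
    if value is None:
        # Simplification: an unset attribute is accepted (a fixed rule fills it in).
        return True
    if kind == "fixed":
        return value == rule["value"]
    if kind == "allowlist":
        return value in rule["values"]
    if kind == "blocklist":
        return value not in rule["values"]
    if kind == "regex":
        # Assumption: the pattern must match the whole value.
        return re.fullmatch(rule["pattern"], value) is not None
    if kind == "range":
        low = rule.get("minValue", float("-inf"))
        high = rule.get("maxValue", float("inf"))
        return low <= value <= high
    if kind == "unlimited":
        return True
    raise ValueError(f"unknown policy type: {kind}")


print(is_allowed(EXAMPLE_RULES["fixed"], "SINGLE_USER"))
print(is_allowed(EXAMPLE_RULES["range"], 8))
```

The fixed rule is the one that matters for this post: a caller either omits the attribute or passes exactly the enforced value.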

Creating a new compute cluster policy

There are several ways of creating a compute cluster policy in Databricks. You could define a new policy from the ground up, clone and edit an existing policy, or use a policy family as your starting point (Databricks-provided policy template with pre-populated rules).

Here is a complete list of attributes supported in cluster policies.

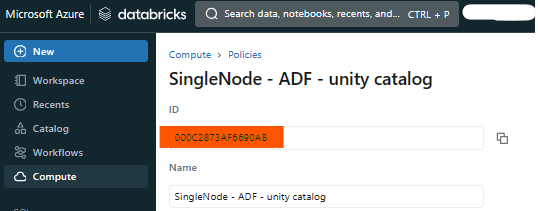

In our example, we will start from a blank custom policy, which we name Single Node - ADF - unity catalog.

We paste the following JSON in the policy definition field in the UI:

    {
      "spark_conf.spark.databricks.cluster.profile": {
        "type": "fixed",
        "value": "singleNode",
        "hidden": true
      },
      "num_workers": {
        "type": "fixed",
        "value": 0,
        "hidden": true
      },
      "data_security_mode": {
        "type": "fixed",
        "value": "SINGLE_USER",
        "hidden": true
      }
    }

All of our options use the Fixed policy type. This is deliberate: it ensures these attributes will be set on our cluster even if they are not passed by the caller (in this case, Azure Data Factory).

The first two options, spark_conf.spark.databricks.cluster.profile and num_workers, could instead be set in Azure Data Factory and omitted from the policy definition. As we saw earlier, these two options alone would give us a functioning single-node cluster. However, the cluster would not be allowed to use Unity Catalog because of its incompatible data access mode.

What we need is to force a SINGLE_USER access mode (which is compatible with Unity Catalog), by setting a fixed data_security_mode cluster policy attribute. This is one of the cluster settings that we can't manipulate from the Azure Data Factory.
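
If you prefer to create the policy programmatically instead of pasting JSON into the UI, the sketch below builds the corresponding HTTP request without sending it. It assumes the Cluster Policies REST endpoint /api/2.0/policies/clusters/create and its name and definition fields; the workspace URL and token are placeholders, and build_create_policy_request is a hypothetical helper name.

```python
import json
import urllib.request

POLICY_NAME = "Single Node - ADF - unity catalog"

# Same rules as in the policy definition above.
POLICY_DEFINITION = {
    "spark_conf.spark.databricks.cluster.profile": {
        "type": "fixed", "value": "singleNode", "hidden": True,
    },
    "num_workers": {"type": "fixed", "value": 0, "hidden": True},
    "data_security_mode": {"type": "fixed", "value": "SINGLE_USER", "hidden": True},
}


def build_create_policy_request(workspace_url, token):
    """Build (but do not send) the HTTP request that would create the policy."""
    body = {
        "name": POLICY_NAME,
        # Assumption: the API expects the definition as a JSON string,
        # not as a nested object.
        "definition": json.dumps(POLICY_DEFINITION),
    }
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/policies/clusters/create",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder workspace URL and token, for illustration only.
request = build_create_policy_request(
    "https://adb-0000000000000000.0.azuredatabricks.net", "<token>"
)
print(request.get_method(), request.full_url)
```

Passing the request to urllib.request.urlopen would actually send it; that step is left out so the example can run without a workspace.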

Since our scenario revolves around limiting cloud compute costs, you may consider limiting the size (and indirectly the cost) of the single-node job cluster by adding this attribute to your policy (the maximum Databricks Unit value here is arbitrary; adjust it to your workloads):

    {
      "dbus_per_hour": {
        "type": "range",
        "maxValue": 4
      }
    }
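
In practice this rule would sit next to the other attributes in the same policy definition rather than in a separate policy. A minimal Python sketch of combining the fragments follows; merge_policy_rules is a hypothetical helper, and the duplicate check is just a guard against one fragment silently overwriting another.

```python
import json

BASE_POLICY = {
    "num_workers": {"type": "fixed", "value": 0, "hidden": True},
    "data_security_mode": {"type": "fixed", "value": "SINGLE_USER", "hidden": True},
}

COST_LIMIT = {
    "dbus_per_hour": {"type": "range", "maxValue": 4},
}


def merge_policy_rules(*fragments):
    """Combine policy fragments into one definition, rejecting duplicate attributes."""
    merged = {}
    for fragment in fragments:
        for attribute, rule in fragment.items():
            if attribute in merged:
                raise ValueError(f"attribute defined twice: {attribute}")
            merged[attribute] = rule
    return merged


policy = merge_policy_rules(BASE_POLICY, COST_LIMIT)
print(json.dumps(policy, indent=2))
```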

In Step 2, we will need to reference the ID of the policy we have just created. This can be found in the UI.

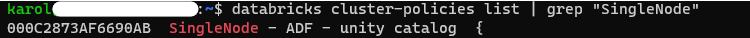

Alternatively, you can use a command line and Databricks CLI. Here, assuming your cluster policy name has SingleNode in its name:

databricks cluster-policies list | grep "SingleNode"
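
If you would rather look the ID up in a script, the same filtering can be done on the JSON returned by the policy list endpoint. The sketch below assumes the response has a top-level policies array whose items carry policy_id and name fields; the sample data is made up, and find_policy_ids is a hypothetical helper.

```python
def find_policy_ids(list_response, name_fragment):
    """Return {policy name: policy_id} for policies whose name contains the fragment."""
    return {
        policy["name"]: policy["policy_id"]
        for policy in list_response.get("policies", [])
        if name_fragment.lower() in policy["name"].lower()
    }


# Made-up sample shaped like the assumed list response.
SAMPLE_RESPONSE = {
    "policies": [
        {"policy_id": "ABC123DEF4567890", "name": "SingleNode - ADF - unity catalog"},
        {"policy_id": "0000000000000001", "name": "Job Compute"},
    ]
}

print(find_policy_ids(SAMPLE_RESPONSE, "SingleNode"))
```

Unlike the grep call, this match is case-insensitive and only looks at the policy name, not at the policy definition.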

Step 2 - Linked Service Advanced Properties

Here we run into the same problem as before. Namely, the ADF UI doesn't allow us to define a cluster policy in the basic default view of the Azure Databricks linked service!

Luckily for us, policyId is an "advanced property" of the Databricks linked service in ADF. In a lot of cases, linked service connectors allow us to set more properties than those exposed to us by the ADF UI. These can be set directly in the linked service code definition or under the Advanced section of the linked service UI (hence, we will call them "advanced properties" for the rest of this article).

Identifying linked service properties

To discover the full range of properties of a selected linked service in Azure Data Factory, you can consult this page in Azure Documentation. Let's inspect the Azure Databricks linked service ARM template definition:

    "type": "AzureDatabricks",
    "typeProperties": {
      "accessToken": {
        "type": "string"
      },
      "authentication": {},
      "credential": {
        "referenceName": "string",
        "type": "CredentialReference"
      },
      "domain": {},
      "encryptedCredential": {},
      "existingClusterId": {},
      "instancePoolId": {},
      "newClusterCustomTags": {},
      "newClusterDriverNodeType": {},
      "newClusterEnableElasticDisk": {},
      "newClusterInitScripts": {},
      "newClusterLogDestination": {},
      "newClusterNodeType": {},
      "newClusterNumOfWorker": {},
      "newClusterSparkConf": {},
      "newClusterSparkEnvVars": {},
      "newClusterVersion": {},
      "policyId": {},
      "workspaceResourceId": {}
    }

As we can see, the additional properties available via the ARM definition do include the option to specify a policyId!

Setting the policyId advanced property value

The advanced property value can be set by editing the code of your Azure Databricks linked service in Azure Data Factory and adding policyId as a property, together with its value, to the linked service JSON definition. The advanced property will then be visible in the ADF UI when you inspect the linked service.

Alternatively, you can copy/paste this snippet into your linked service Advanced section, if you are afraid of messing up the code definition:

    {
      "properties": {
        "typeProperties": {
          "policyId": "<policy_id_here>"
        }
      }
    }
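
If you manage linked service definitions as JSON files (for example in a Git-integrated factory), the same change can be scripted. The following Python sketch adds policyId under properties.typeProperties without touching anything else; the sample linked service, its name, and the with_policy_id helper are hypothetical.

```python
import copy
import json


def with_policy_id(linked_service, policy_id):
    """Return a copy of the linked service definition with policyId set."""
    updated = copy.deepcopy(linked_service)
    type_properties = updated.setdefault("properties", {}).setdefault("typeProperties", {})
    type_properties["policyId"] = policy_id
    return updated


# Hypothetical, heavily trimmed linked service definition.
linked_service = {
    "name": "AzureDatabricks_ls",
    "properties": {
        "type": "AzureDatabricks",
        "typeProperties": {"newClusterNumOfWorker": "0"},
    },
}

updated = with_policy_id(linked_service, "<policy_id_here>")
print(json.dumps(updated, indent=2))
```

Working on a deep copy keeps the original definition unchanged, which makes it easy to compare the two before publishing.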

End Result

Now that our cluster policy has been defined in the Databricks workspace and its ID has been set in the Azure Data Factory linked service, the next time we use our linked service, a Databricks job will execute on a single-node job cluster with the correct access mode for Unity Catalog. 👍

We have also seen how, through a combination of compute policies in Databricks and advanced linked service properties, we could control the configuration of Databricks clusters beyond what the Azure Data Factory linked service, on its own, allows us.

The same method could also be used if you would like to use compute nodes with custom container images from ADF. This will be the topic of the next blog post!

Special thanks to Richard Nordström for the thorough review!